AWS Lambda is a core component of a wide range of software designs. One advantage of the Lambda service is its simple and efficient integration with other services in the AWS ecosystem. Amazon SQS is a long-standing member of this ecosystem that offers a highly scalable message queuing service and integrates well with AWS Lambda.

Queue offering

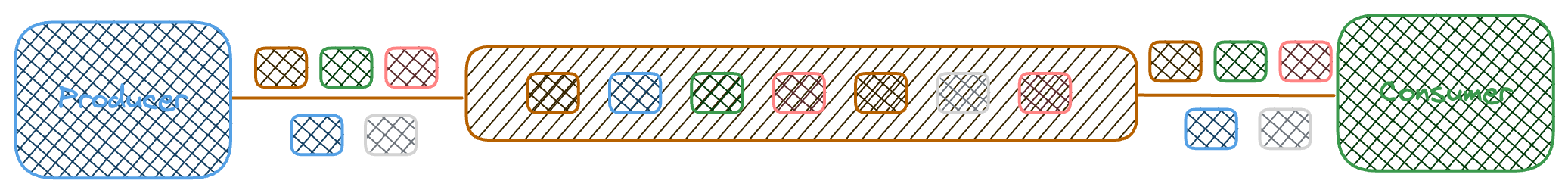

Queuing services decouple software systems by acting as an asynchronous intermediary that carries messages from one system to another, so producers and consumers stay independent of each other.

In a queuing system, producers write messages to the queue, consumers fetch messages from the queue and process them, and a message is removed once it is no longer needed. By default, a queuing service keeps a message as long as no consumer asks for its removal.

This design lets producers and consumers be independent and decoupled in terms of processing capacity, and lets each side manage its internal state in isolation, for example when handling failures or scaling.

Event vs Job Consumer

Amazon SQS, like any queuing service, offers the basic functionality of message retrieval by consumers. This is often done by some sort of batch job or instance that periodically asks for messages, and any consumer can ask for removal at the end of processing. Event-based consumption, on the other hand, is more of a serverless concept in which messages are pushed to consumers as they become available. Although Amazon SQS is a serverless messaging service, it does not offer event-based message distribution, unlike Amazon SNS, and works with a job consumer approach.
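To make the job consumer approach concrete, here is a minimal sketch of one polling cycle. `InMemoryQueue` and `poll_once` are hypothetical names used for illustration; the queue is an in-memory stand-in, not the SQS API, and it ignores visibility timeouts.

```python
import itertools


class InMemoryQueue:
    """Hypothetical stand-in for a pull-based queue (not the SQS API)."""

    def __init__(self):
        self._messages = {}
        self._ids = itertools.count()

    def send(self, body):
        message_id = next(self._ids)
        self._messages[message_id] = body
        return message_id

    def receive(self, max_messages):
        # Messages stay in the queue until a consumer deletes them.
        return list(self._messages.items())[:max_messages]

    def delete(self, message_id):
        self._messages.pop(message_id, None)

    def __len__(self):
        return len(self._messages)


def poll_once(queue, handler, max_messages=10):
    """One polling cycle: fetch a batch, process it, then ask for removal."""
    processed = 0
    for message_id, body in queue.receive(max_messages):
        handler(body)
        queue.delete(message_id)  # removal is requested by the consumer
        processed += 1
    return processed
```

A job consumer would call `poll_once` on a schedule; nothing is pushed to it by the queue.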


AWS Lambda / SQS

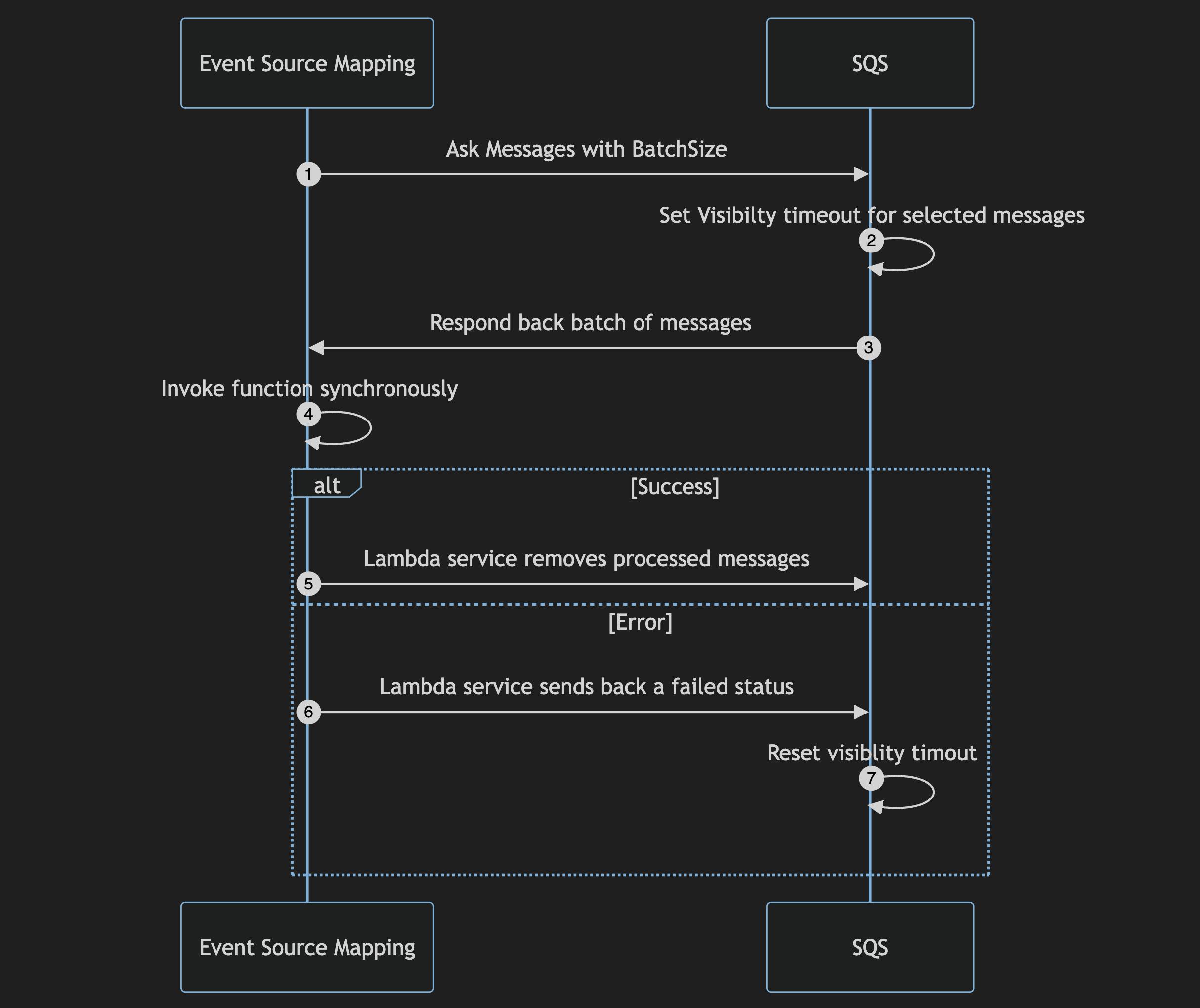

AWS Lambda integrates with SQS, and this integration feels like smooth event-based consumption, but that impression comes from the excellent way AWS Lambda manages the integration. Behind the scenes, the Lambda service asks for a batch of visible messages in the queue and manages consumption and the message lifecycle on its own. The core management of messages inside the queue, such as visibility timeout and delays, of course remains under SQS ownership.

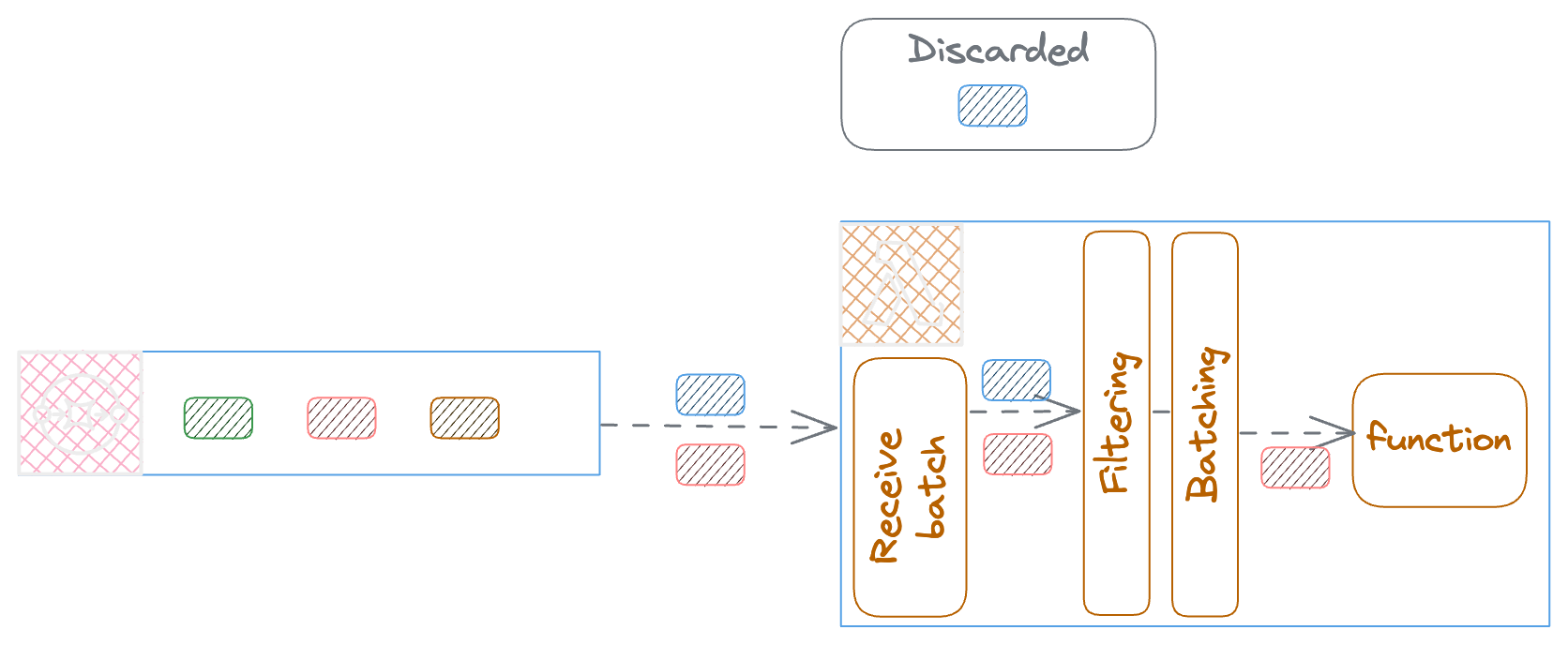

The Lambda poller receives messages from SQS, specifying the desired maximum number of messages and the maximum wait time for messages to accumulate.

Then, in the Lambda event source mapping, filtering is applied, followed by batching, which prepares the batch of records according to the function configuration before the function is invoked.
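The filter-then-batch order described above can be sketched as follows. This is a simplified model, not the Lambda service's implementation: `prepare_batches` and `run_cycle` are hypothetical names, `keep` stands in for the configured filter, and `function` stands in for the Lambda handler, which receives the records under a `Records` key.

```python
def prepare_batches(polled, keep, batch_size):
    """Apply the filter first, then split what is left into batches."""
    kept = [record for record in polled if keep(record)]
    return [kept[i:i + batch_size] for i in range(0, len(kept), batch_size)]


def run_cycle(polled, keep, batch_size, function):
    """Invoke the function once per prepared batch and collect the results."""
    results = []
    for batch in prepare_batches(polled, keep, batch_size):
        results.append(function({"Records": batch}))
    return results
```

For example, five polled records of which three pass the filter, with a batch size of two, lead to two invocations.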

Filtering

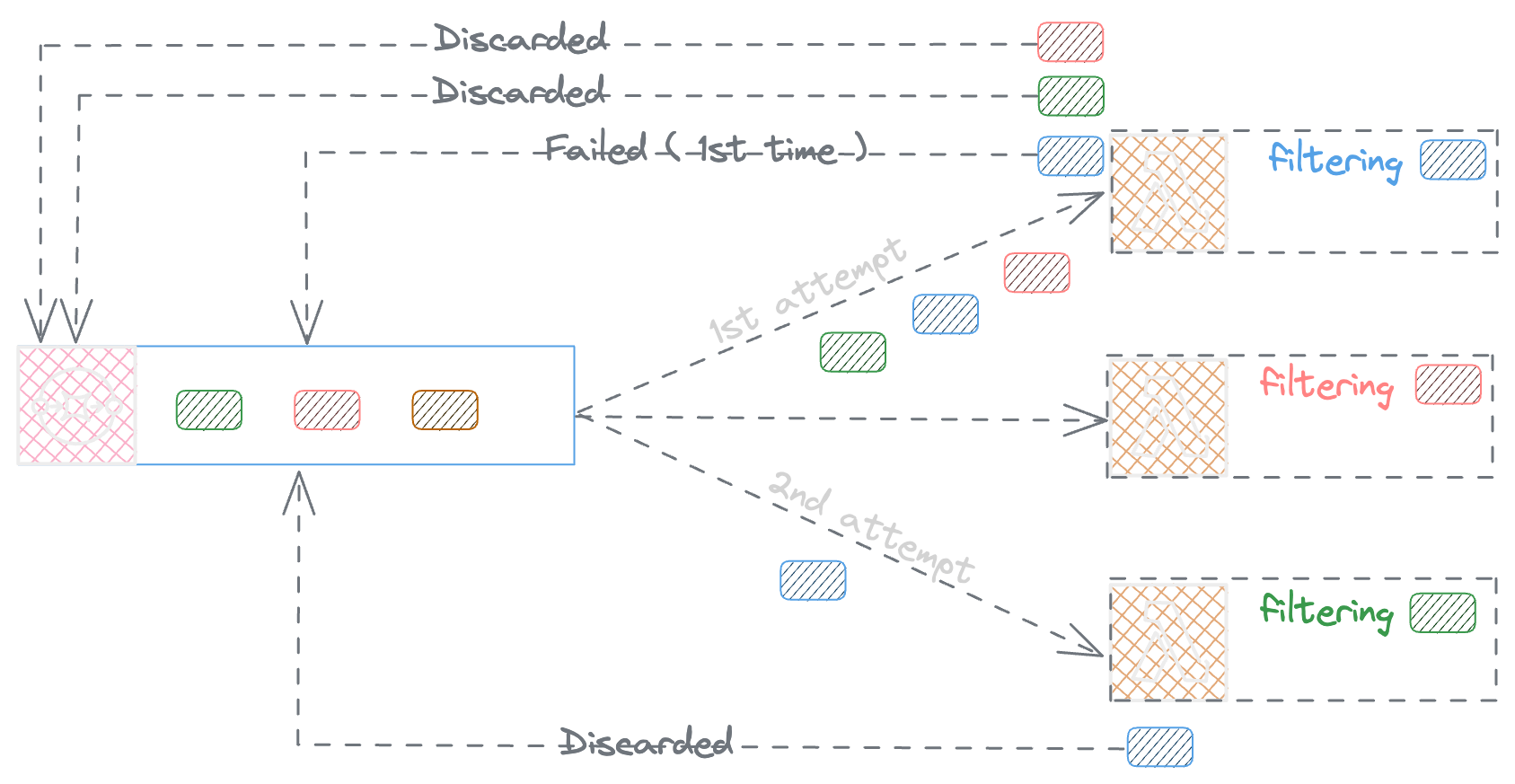

As the diagram illustrates, filtering is applied on the Lambda service side. If a record matches the configured filter, it is passed to the Lambda function. If a record does not match the configured filter, the Lambda service discards it from processing, but it also treats it as a message to be deleted and removes it from SQS.
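The effect of filtering on a polled batch can be sketched like this. The matcher below is a deliberately simplified assumption that only supports exact-value matching, where each leaf of the pattern is a list of allowed values and the message body is valid JSON; the real filter syntax supports more operators. `matches` and `filter_records` are hypothetical names.

```python
import json


def matches(pattern, data):
    """Simplified matcher: every pattern key must be present with an allowed value."""
    for key, expected in pattern.items():
        if key not in data:
            return False
        if isinstance(expected, dict):
            if not isinstance(data[key], dict) or not matches(expected, data[key]):
                return False
        elif data[key] not in expected:
            return False
    return True


def filter_records(records, pattern):
    """Split records into those passed to the function and those discarded."""
    kept, discarded = [], []
    for record in records:
        # Assumes the body is valid JSON so it can be matched field by field.
        parsed = {**record, "body": json.loads(record["body"])}
        (kept if matches(pattern, parsed) else discarded).append(record)
    # Discarded records are not returned to the queue: they are deleted as well.
    return kept, discarded
```

With a pattern such as `{"body": {"isProductTransitEvent": ["true"]}}`, only the transit payload ends up in `kept`; everything in `discarded` is removed from the queue without ever reaching a function.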

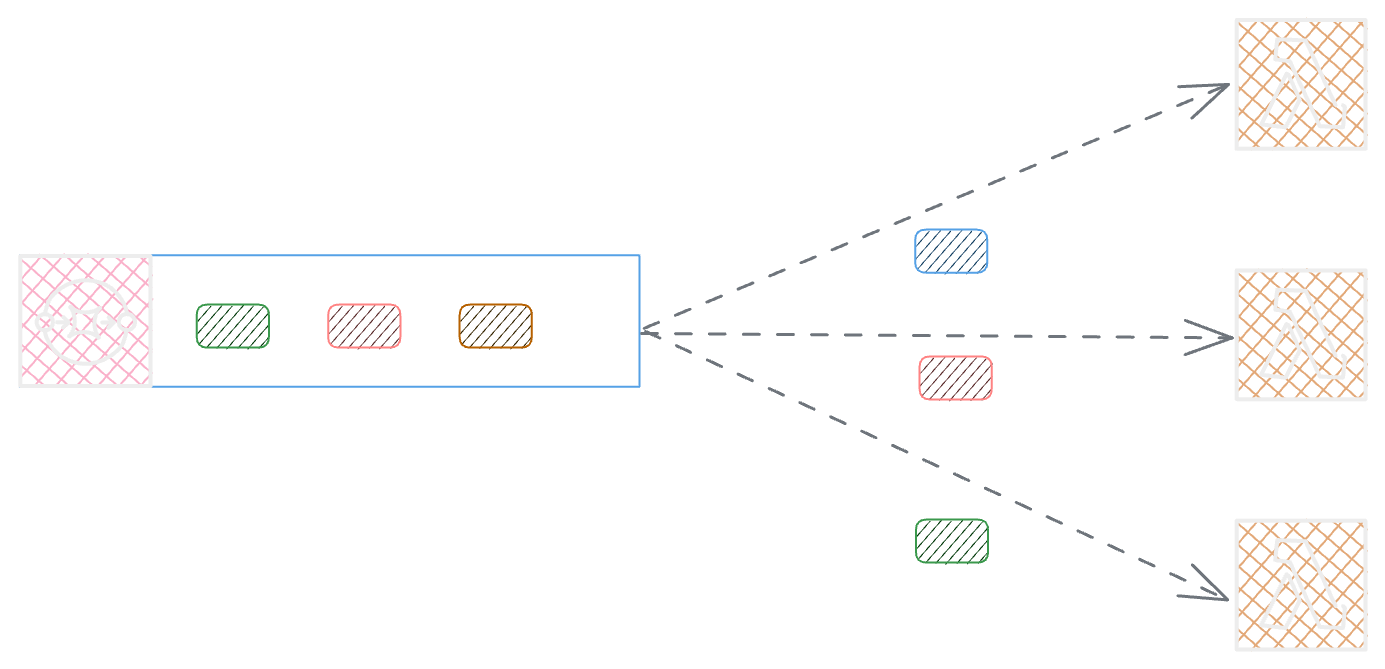

If you are a fan of reading documentation like me, you already know this. Still, I wanted to see how SQS would behave if I used it as a central queue in my system, the idea being that a central part of the system could offload events to multiple Lambda consumers.

The reasoning was that if I applied filtering so that each Lambda function listens to a different event, each message would have a single consumer, which would not go against the recommendation. That would give me central control of my events without a monolithic function handling a significant amount of processing, and without some sort of Lambda-based orchestration. The following diagram demonstrates the exact scenario.

As part of my tests, I sent 3 different messages with different payloads:

    {
      "isProductTransitEvent": "true",
      "productId": "123456",
      "deliveryId": "1"
    }

    {
      "isProductSynchroEvent": "true",
      "productId": "123456",
      "lotId": "12345"
    }

    {
      "isProductStockEvent": "true",
      "productId": "123456",
      "quantity": 10
    }

Only the first function received its messages. Sending more messages with the third payload resulted in some of them being received, seemingly at random, but not all.

Another hypothesis was to send a single payload shape with different values in the filtered fields. This time neither the second nor the third function received the messages matching its filter. Sending 20 messages resulted in the same behavior as before.
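The behavior observed in these tests can be reproduced with a small simulation. It rests on an assumption, not a measurement: each message is picked up by exactly one of the three pollers, and a poller whose filter does not match deletes the message. `CONSUMER_FLAGS`, `simulate`, and `pick_poller` are hypothetical names, and the random choice is only a stand-in for whichever poller happens to receive the message.

```python
import random

# One hypothetical consumer per event type, identified by the flag it filters on.
CONSUMER_FLAGS = [
    "isProductTransitEvent",
    "isProductSynchroEvent",
    "isProductStockEvent",
]


def simulate(messages, pick_poller=None, seed=0):
    """Return (delivered per consumer, dropped) for consumers sharing one queue."""
    rng = random.Random(seed)
    if pick_poller is None:
        # Assumption: any of the pollers may receive any given message.
        pick_poller = lambda message: rng.randrange(len(CONSUMER_FLAGS))
    delivered = {flag: [] for flag in CONSUMER_FLAGS}
    dropped = []
    for message in messages:
        flag = CONSUMER_FLAGS[pick_poller(message)]
        if message.get(flag) == "true":
            delivered[flag].append(message)
        else:
            # The filter of the receiving poller did not match: message deleted.
            dropped.append(message)
    return delivered, dropped
```

Passing `pick_poller=lambda message: 0` models the case where the first trigger receives everything: only the transit messages are delivered and the other two event types are dropped.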

At a high level, the Lambda integration with SQS through an event source mapping can be presented as illustrated in the following sequence diagram, and discarded events are treated the same as successfully processed messages.

The results show that the first trigger receives a large share of the events most of the time. But what if the records fail? What happens to the discarded events that were part of the same batch of messages passed through the first trigger?

The answer is simple. In case of failure, the discarded messages are deleted just as in the success scenario, and the failed messages are retried. However, after the error, the failed messages become visible again once the visibility timeout expires and are received again, which can potentially lead to losing the message in the retry phase. In the tests I did for this article, I never observed a second retry for 10 messages.
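The outcome of a single polled batch can be sketched as below, under the assumption that an exception from the function fails every record that passed the filter. `settle` is a hypothetical helper, and each record is assumed to carry an `id` field for illustration.

```python
def settle(polled, keep, handler):
    """Return (deleted_ids, retry_ids) for one polled batch."""
    kept = [record for record in polled if keep(record)]
    # Records rejected by the filter are deleted whether or not the handler fails.
    deleted = [record["id"] for record in polled if not keep(record)]
    try:
        handler(kept)
    except Exception:
        # Failed records stay in the queue and become visible again later.
        return deleted, [record["id"] for record in kept]
    return deleted + [record["id"] for record in kept], []
```

The records in `retry_ids` go through the same poll and filter cycle again, so a retried message may be received through a trigger whose filter does not match it and be deleted there.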

Hope this can be useful ;)